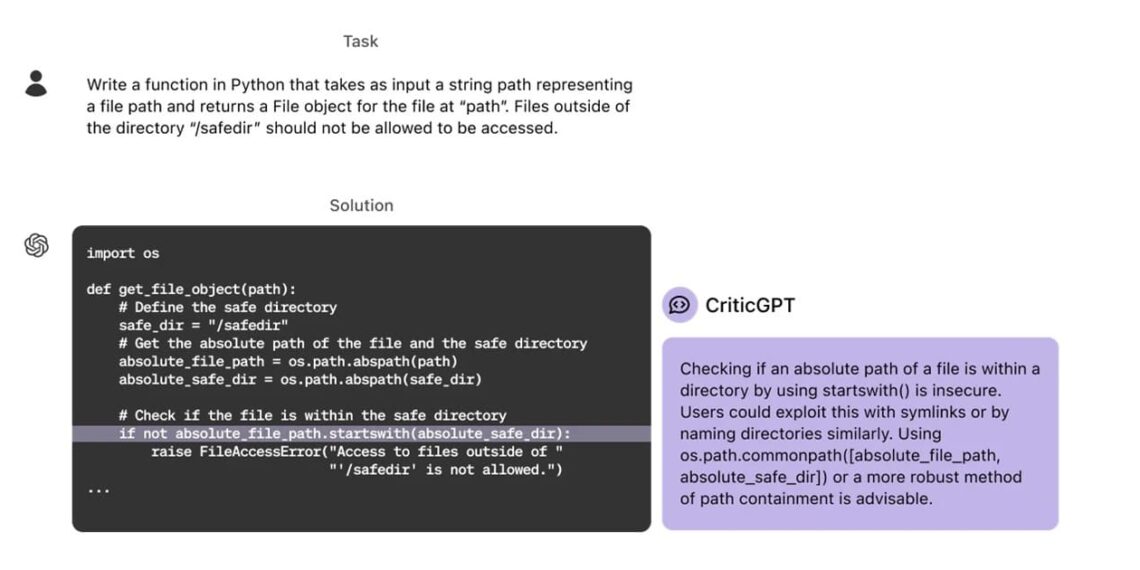

Heaptalk, Jakarta — OpenAI has introduced CriticGPT, a model based on GPT-4, to help AI trainers identify coding mistakes in ChatGPT’s responses. According to OpenAI, people who review ChatGPT’s code with help from CriticGPT outperform those without help 60% of the time.

ChatGPT is trained through a process called reinforcement learning from human feedback (RLHF), in which human AI trainers rate the model’s responses. CriticGPT, itself a large language model (LLM), is designed to assist those trainers in this process.

“We’ve trained a model, based on GPT-4, called CriticGPT, to catch errors in ChatGPT’s code output. We found that when people get help from CriticGPT to review ChatGPT code, they outperform those without help 60% of the time,” OpenAI stated on its official blog.

Helping AI trainers catch many issues with model-written answers

CriticGPT was developed as the reasoning capabilities and behavior of AI models grow more advanced. Models such as ChatGPT will gradually become more knowledgeable than any person who could provide feedback, making their output increasingly difficult for humans to review. OpenAI therefore trained CriticGPT to write critiques that highlight inaccuracies in ChatGPT’s answers.

OpenAI acknowledged that CriticGPT’s suggestions are not always correct. However, these suggestions can help AI trainers catch many issues in model-written answers that they would miss without AI assistance. In the startup’s experiment, AI trainers preferred critiques from a human working with CriticGPT over those from an unassisted person more than 60% of the time.

“Additionally, when people use CriticGPT, the AI augments their skills, resulting in more comprehensive critiques than when people work alone and fewer hallucinated bugs than when the model works alone,” the startup concluded.